We’ll use multiple AI models, the Vercel AI SDK, Supabase, and the Serper API to create an enterprise-grade AI assistant with generative UI, web browsing, and image analysis capabilities.

Features

- Multiple AI Models: Switch between OpenAI, Claude, Groq, and others

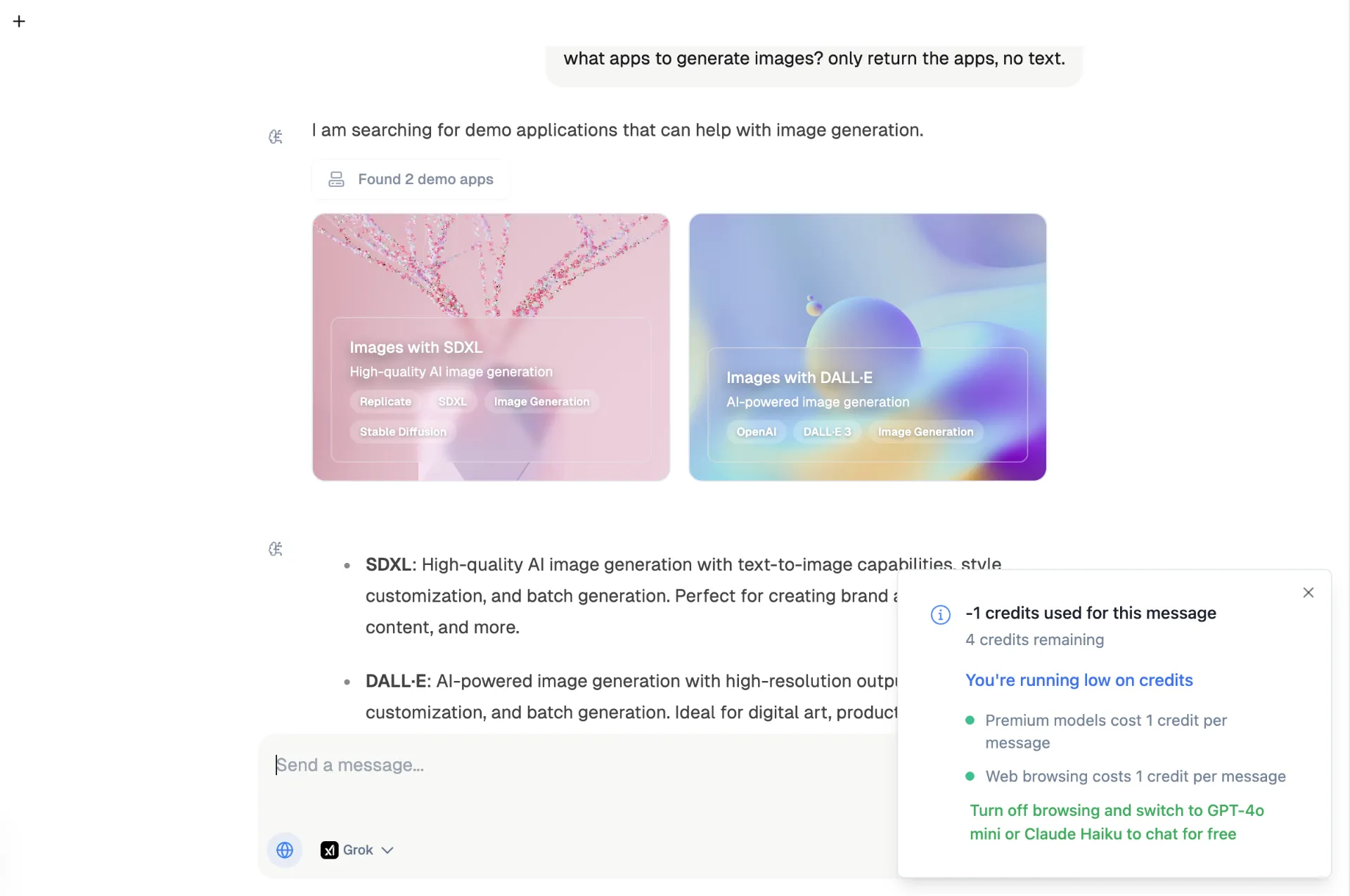

- Generative UI: Dynamic components and interactive app suggestions

- Image Analysis: Process and analyze images with vision models

- Web Search: Real-time internet access via Serper API

- Web Scraping: Extract content from URLs using Jina AI

- Document Generation: Create and edit documents in a Canvas interface

- Multi-language Support: Communicate in various languages

Prerequisites

To build your advanced AI assistant, you’ll need several services set up. If you haven’t done so yet, start with these:

Supabase

Set up user authentication and a PostgreSQL database using Supabase.

Storage

Set up file storage using Cloudflare R2 for image uploads.

OpenAI

Required for GPT models. Get API access from OpenAI.

Groq API

Required for Llama models. Sign up and get an API key.

xAI (Grok)

Required for Grok models. Get API access from xAI.

Anthropic

Required for Claude models. Get API access from Anthropic.

Setting up Serper API

- Visit serper.dev

- Create an account and get your API key

- Add to your environment variables:

Web scraping is handled by Jina AI, which is free and doesn’t require an API key.
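The two web tools above can be sketched as plain HTTP calls. This is a minimal sketch, not the project's implementation: the helper names (searchWeb, readUrl, parseOrganicResults), the result limit, and the truncation length are hypothetical. It relies on Serper's search endpoint (https://google.serper.dev/search, with the key in the X-API-KEY header) and on Jina's Reader, which returns a page's content when its URL is prefixed with https://r.jina.ai/. fetch is passed in as a parameter so the helpers can be exercised with a stub.

```typescript
// Hypothetical web-tool helpers; names and limits are illustrative only.
// Serper: POST the query as JSON with the key in the X-API-KEY header.
// Jina Reader: GET https://r.jina.ai/<target-url>, no API key needed.
const SERPER_SEARCH_URL = "https://google.serper.dev/search";
const JINA_READER_PREFIX = "https://r.jina.ai/";

export interface SearchResult {
  title: string;
  link: string;
  snippet: string;
}

// Minimal subset of fetch used here, so a stub can be injected in tests.
export type FetchLike = (
  url: string,
  init?: { method?: string; headers?: Record<string, string>; body?: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown>; text(): Promise<string> }>;

// Keeps entries from Serper's "organic" array that have a title and a link.
export function parseOrganicResults(payload: unknown, limit = 5): SearchResult[] {
  if (typeof payload !== "object" || payload === null) return [];
  const organic = (payload as { organic?: unknown }).organic;
  if (!Array.isArray(organic)) return [];
  const results: SearchResult[] = [];
  for (const item of organic) {
    if (typeof item !== "object" || item === null) continue;
    const { title, link, snippet } = item as Record<string, unknown>;
    if (typeof title !== "string" || typeof link !== "string") continue;
    results.push({ title, link, snippet: typeof snippet === "string" ? snippet : "" });
    if (results.length >= limit) break;
  }
  return results;
}

export async function searchWeb(query: string, apiKey: string, fetchImpl: FetchLike): Promise<SearchResult[]> {
  const res = await fetchImpl(SERPER_SEARCH_URL, {
    method: "POST",
    headers: { "X-API-KEY": apiKey, "Content-Type": "application/json" },
    body: JSON.stringify({ q: query }),
  });
  if (!res.ok) throw new Error(`Serper request failed with status ${res.status}`);
  return parseOrganicResults(await res.json());
}

// Fetches a page through Jina Reader and truncates it to keep prompts small.
export async function readUrl(target: string, fetchImpl: FetchLike, maxChars = 8000): Promise<string> {
  if (!/^https?:\/\//i.test(target)) throw new Error(`Not an http(s) URL: ${target}`);
  const res = await fetchImpl(JINA_READER_PREFIX + target);
  if (!res.ok) throw new Error(`Jina Reader request failed with status ${res.status}`);
  const text = await res.text();
  return text.length > maxChars ? text.slice(0, maxChars) : text;
}
```

In a Node 18+ or edge route you would pass the global fetch, for example searchWeb(query, key, fetch), with the key read from whichever environment variable you configured for Serper. Truncating scraped text keeps one long page from filling the model's context window.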

Database Setup

The chat feature uses tables that should already exist if you followed the Quick Setup guide and ran the Supabase migrations. The required tables are:

- chats: Stores chat sessions and metadata

- messages: Manages message history and content

- images: Handles image uploads and analysis results

- chat_documents: Stores generated documents and versions

supabase/migrations/20240000000003_chat.sql

App Structure

The chat application is organized in a modular structure:

- API Routes (app/api/(apps)/(chat)/*):
  - chat/route.ts: Main chat endpoint with multi-model support
  - document/route.ts: Document generation and versioning
  - images/upload/route.ts: Image upload and analysis
  - history/route.ts: Chat history management

- Chat App (app/(apps)/chat/*):
  - page.tsx: Main chat interface with model selection
  - info.tsx: App information and features display
  - prompt.ts: System prompts for different modes
  - tools.ts: Tool definitions (document, web browsing, app suggestions)
  - toolConfig.ts: Feature flags and configurations

- Components (components/chat/*):
  - widgets/: Generative UI components (app cards)

- AI Configuration (lib/ai/*):
  - ai-utils.ts: Model provider integration
  - chat.ts: Message handling utilities
  - models.ts: Available models configuration
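One way tools.ts and toolConfig.ts could divide the work is for tools.ts to define every tool and toolConfig.ts to hold on/off flags, with a small filter between them. The sketch below assumes that split; the tool names, flag keys, and the pickEnabledTools helper are hypothetical, not taken from the project.

```typescript
// Hypothetical sketch: tool names and flag keys are assumptions, not the
// project's actual tools.ts / toolConfig.ts contents.
export type ToolName = "createDocument" | "browseWeb" | "suggestApps";

export interface ToolFlags {
  documents: boolean;
  webBrowsing: boolean;
  appSuggestions: boolean;
}

// Which feature flag controls each tool.
const FLAG_FOR_TOOL: Record<ToolName, keyof ToolFlags> = {
  createDocument: "documents",
  browseWeb: "webBrowsing",
  suggestApps: "appSuggestions",
};

// Returns only the tool definitions whose flag is on. T is generic so the
// helper works with any tool-definition object type.
export function pickEnabledTools<T>(
  tools: Record<ToolName, T>,
  flags: ToolFlags,
): Partial<Record<ToolName, T>> {
  const enabled: Partial<Record<ToolName, T>> = {};
  for (const name of Object.keys(tools) as ToolName[]) {
    if (flags[FLAG_FOR_TOOL[name]]) enabled[name] = tools[name];
  }
  return enabled;
}
```

Keeping the filter generic means it does not care what shape tools.ts uses for a tool definition; only the flag lookup has to stay in sync with the tool names.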

Model Configuration

You can customize the available models in lib/ai/models.ts:

lib/ai/models.ts
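A models configuration could look roughly like the following sketch. The field names, labels, helper functions, and the premium gpt-4o entry are assumptions; the three free model IDs are the ones listed under Credit System, written as that shorthand (provider APIs may expect longer, versioned IDs).

```typescript
// Hypothetical shape for a models config; field names and the premium entry
// are illustrative. Free IDs mirror the shorthand used in the credit section.
export type Provider = "openai" | "anthropic" | "groq" | "xai";

export interface ModelConfig {
  id: string;
  label: string;
  provider: Provider;
  requiresCredits: boolean;
}

export const MODELS: ModelConfig[] = [
  { id: "gpt-4o-mini", label: "GPT-4o mini", provider: "openai", requiresCredits: false },
  { id: "claude-3-5-haiku", label: "Claude 3.5 Haiku", provider: "anthropic", requiresCredits: false },
  { id: "llama-3.1-70b", label: "Llama 3.1 70B", provider: "groq", requiresCredits: false },
  { id: "gpt-4o", label: "GPT-4o", provider: "openai", requiresCredits: true },
];

export function findModel(id: string): ModelConfig | undefined {
  return MODELS.find((m) => m.id === id);
}

// Models a user can pick, depending on whether they have credits left.
export function selectableModels(hasCredits: boolean): ModelConfig[] {
  return MODELS.filter((m) => hasCredits || !m.requiresCredits);
}
```

Keeping the provider and the credit requirement on each entry lets the model picker and the credit check read from one list instead of duplicating it.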

Vercel AI SDK Integration

The chat application uses the Vercel AI SDK for seamless AI model integration and streaming responses. This provides:

- Unified interface for multiple AI providers

- Real-time streaming responses

- Built-in TypeScript support

- Efficient message handling

The Vercel AI SDK handles the complexity of managing different AI providers, allowing us to switch between models without changing the calling code while keeping a consistent API.
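Switching models usually comes down to mapping a model identifier to the right provider. The sketch below assumes a hypothetical "provider:model" string format and a hypothetical createModelResolver helper, and injects the provider factories so it runs without dependencies. In the app, each factory would be an AI SDK provider function such as openai from @ai-sdk/openai, and the returned model would be passed as the model option to streamText.

```typescript
// Hypothetical resolver for "provider:model" strings. Factories are injected
// so this runs without dependencies; in the app they would be AI SDK
// provider functions whose results are passed to streamText.
export type ModelFactory<M> = (modelName: string) => M;

export function createModelResolver<M>(factories: Record<string, ModelFactory<M>>) {
  return (qualifiedId: string): M => {
    const sep = qualifiedId.indexOf(":");
    if (sep <= 0 || sep === qualifiedId.length - 1) {
      throw new Error(`Expected "provider:model", got "${qualifiedId}"`);
    }
    const provider = qualifiedId.slice(0, sep);
    // Own-property check so names like "toString" are not treated as providers.
    if (!Object.prototype.hasOwnProperty.call(factories, provider)) {
      throw new Error(`Unknown provider "${provider}"`);
    }
    const factory = factories[provider] as ModelFactory<M>;
    // Everything after the first colon is the provider's own model ID.
    return factory(qualifiedId.slice(sep + 1));
  };
}
```

Recent AI SDK releases also include a provider registry (createProviderRegistry) built on the same provider:model convention, which may be preferable if your installed version supports it.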

Credit System

The chat application includes a flexible credit system to manage access to premium features.

Features & Limitations

- Free Features:
  - Access to basic models (gpt-4o-mini, claude-3-5-haiku, llama-3.1-70b)
  - Standard chat functionality
  - Image analysis with free models

- Premium Features (require credits):
  - Advanced AI models
  - Web browsing capabilities
  - Additional features as configured
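To decide whether a request needs credits, the route has to combine the chosen model with the features it uses. A minimal sketch, assuming a flat per-request price: the free model list comes from above, while the credit amounts, the request shape, and the creditsRequired name are hypothetical.

```typescript
// Hypothetical pricing: the free model list matches the section above, but the
// credit amounts and the request shape are assumptions.
const FREE_MODELS = new Set(["gpt-4o-mini", "claude-3-5-haiku", "llama-3.1-70b"]);
const PREMIUM_MODEL_COST = 1; // credits per message with a non-free model
const WEB_BROWSING_COST = 1; // extra credits when web browsing is used

export interface ChatRequestFeatures {
  modelId: string;
  webBrowsing: boolean;
}

// Returns how many credits a request costs; 0 means it is free.
export function creditsRequired({ modelId, webBrowsing }: ChatRequestFeatures): number {
  let cost = 0;
  if (!FREE_MODELS.has(modelId)) cost += PREMIUM_MODEL_COST;
  if (webBrowsing) cost += WEB_BROWSING_COST;
  return cost;
}
```

Pricing each premium feature separately keeps "free" as the case where every term is zero, so adding a new premium feature only adds one more line.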

Implementation

The credit system is implemented through two main components, including the chat API route (app/api/(apps)/(chat)/chat/route.ts), which:

- Checks the user’s available credits

- Validates feature access

- Deducts credits for premium usage

- Returns credit usage information in response headers
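The four steps above could be sketched as follows, with the credit balance behind a hypothetical CreditStore interface. The chargeForRequest name, the 402 status, and the JSON payload placed in x-credit-usage are assumptions, not the project's documented format.

```typescript
// Hypothetical sketch of the route's credit steps against an injected store.
// The store interface, 402 status, and header payload are assumptions.
export interface CreditStore {
  getBalance(userId: string): Promise<number>;
  deduct(userId: string, amount: number): Promise<number>; // returns new balance
}

export type CreditCheck =
  | { allowed: true; headers: Record<string, string> }
  | { allowed: false; status: number; error: string };

export async function chargeForRequest(
  store: CreditStore,
  userId: string,
  cost: number,
): Promise<CreditCheck> {
  if (cost === 0) {
    // Free usage: nothing to validate or deduct.
    return { allowed: true, headers: { "x-credit-usage": JSON.stringify({ used: 0 }) } };
  }
  const balance = await store.getBalance(userId);
  if (balance < cost) {
    return { allowed: false, status: 402, error: "Insufficient credits" };
  }
  const remaining = await store.deduct(userId, cost);
  return {
    allowed: true,
    headers: { "x-credit-usage": JSON.stringify({ used: cost, remaining }) },
  };
}
```

Reading the balance and then deducting it in two calls can race when a user sends concurrent requests; a production version would do the check and the deduction in one atomic database operation, such as a conditional UPDATE in Postgres.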

Credit Headers

The API returns credit usage information in the x-credit-usage header:

The credit system is designed to be flexible and can be easily modified or replaced with your own payment/subscription system.
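On the client, the header can be read from the fetch response. This sketch assumes the header carries a JSON object with a numeric used field and an optional remaining field, which is a guess at the format rather than the documented one; readCreditUsage and HeaderSource are hypothetical names.

```typescript
// Hypothetical client-side reader. Assumes x-credit-usage holds JSON like
// {"used": 1, "remaining": 4}; this format is not confirmed by the docs.
export interface CreditUsage {
  used: number;
  remaining?: number;
}

// Anything with a get(name) method, e.g. a fetch Response's headers.
export interface HeaderSource {
  get(name: string): string | null | undefined;
}

export function readCreditUsage(headers: HeaderSource): CreditUsage | null {
  const raw = headers.get("x-credit-usage");
  if (!raw) return null;
  try {
    const parsed: unknown = JSON.parse(raw);
    if (typeof parsed !== "object" || parsed === null) return null;
    const { used, remaining } = parsed as Record<string, unknown>;
    if (typeof used !== "number") return null;
    return typeof remaining === "number" ? { used, remaining } : { used };
  } catch {
    return null; // header present but not valid JSON
  }
}
```

A Response's headers object satisfies HeaderSource, so readCreditUsage(response.headers) works directly; it returns null rather than throwing when the header is missing or malformed.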